[Revisited] Trust & Safety is how platforms put values into action

I'm Alice Hunsberger. Trust & Safety Insider is my weekly rundown on the topics, industry trends and workplace strategies that trust and safety professionals need to know about to do their job.

This week, I'm heading out on my fourth and final trip of the last six weeks – this time to Harvard's Radcliffe Institute for an exploratory seminar on Online Civic Trust in Climate Change. Part of the goal of the seminar is to help create resources for T&S professionals, so I'm excited to take part and report back.

You can also catch me on Wednesday, when I'm speaking at a webinar organised by Thorn about ethical AI for T&S. Register if you haven't already.

For today's newsletter, I'm resharing a post from last year which feels very relevant again. Get in touch if you have questions about today's post. Here we go! – Alice

Why platforms need to be more explicit about their values (updated for 2025)

The original version of this post was shared almost 18 months ago, just after I started writing T&S Insider. A lot has changed since then and, with AI companies launching social networks with minimal safeguards and T&S policies at various platforms changing with the political winds, I thought it was worth revisiting. I've updated some of the references but the core argument is the same. Enjoy – Alice

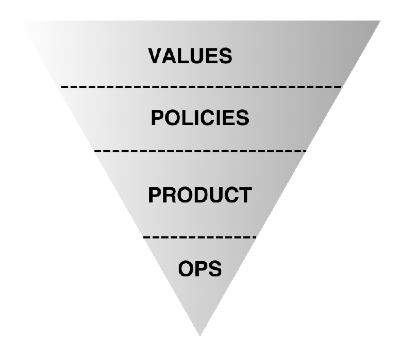

The practice of Trust & Safety is, in its broadest definition, how companies put their values into action. It’s not just what they say, but what they actually do.

A lot of emphasis is put on the act of content moderation, as demonstrated by the discussion around Charlie Kirk's death, but content moderation is just one part of a larger T&S strategy designed to reinforce specific company values. Each element of that strategy is an editorial decision (see the argument made by the platforms in the NetChoice cases) that shapes what the platform feels like and the types of people who want to spend time there.

Most of you will be familiar with how the process works but, for those who aren't, it goes something like this:

- Platforms start by defining their particular values, then write policies that are aligned with those values

- They create product features and interventions to reinforce those policies

- Finally, they resort to operations, often human moderators, to enforce the policies

It is through this process that companies must decide how they balance the sometimes competing values of privacy, safety, and self-expression.